Feature Engineering & Selection in ML: A Comprehensive Guide

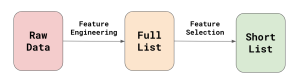

Machine learning workflows heavily rely on feature engineering and feature selection to build accurate and high-performing models. While these two concepts are often confused or used interchangeably, it is essential to understand their distinct objectives to improve your data science workflow and pipelines. In this comprehensive guide, we will explore the concepts of feature engineering and feature selection, their importance, and the various techniques used in each process.

Understanding Features and Feature Matrices

Before delving into feature engineering and feature selection, let’s first establish a clear understanding of what features and feature matrices are. In machine learning, the goal is to predict or infer an output based on a set of training data. Machine learning algorithms typically take in a collection of numeric examples as input. These examples are stacked together to form a two-dimensional “feature matrix.” Each row in the matrix represents an example, while each column represents a specific feature.
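
As a minimal sketch of this layout, the snippet below builds a tiny feature matrix with NumPy. The feature names and values are hypothetical and chosen only for illustration.

```python
import numpy as np

# Hypothetical toy data: three examples (rows), two features (columns).
feature_names = ["age", "num_purchases"]
X = np.array([
    [34, 5],
    [27, 0],
    [45, 12],
])

n_examples, n_features = X.shape
first_example = X[0]         # one row = one example
purchases_column = X[:, 1]   # one column = one feature across all examples
```

Indexing a row returns everything known about one example, while indexing a column returns one feature for every example.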

Features play a vital role in machine learning as they transform the input data into a format suitable for algorithms. In some cases, where the input already consists of single numeric values per example, no transformation is necessary. However, for most use cases, especially those outside of deep learning, feature engineering is crucial to convert data into a machine learning-ready format. The choice of features significantly affects both the interpretability and the performance of the resulting models.

What is Feature Engineering?

Feature engineering involves using domain knowledge to extract new variables from raw data that enhance the performance of machine learning algorithms. In a typical machine learning scenario, data scientists predict quantities using information from various data sources within their organization. These sources often contain multiple tables connected through specific columns.

For example, consider an e-commerce website’s database, which may include a “Customers” table with a row for each customer and an “Interactions” table with information about each customer’s interactions on the site. To predict when a customer will make a purchase, we need a feature matrix with a row for each customer. However, the “Customers” table alone may not provide sufficient relevant information. This is where feature engineering becomes crucial.

By leveraging the historical data in the “Interactions” table, we can compute aggregate statistics for each customer, such as the average time between past purchases, the total number of past purchases, or the maximum amount spent in previous purchases. These features provide valuable insights into a customer’s behavior and help improve predictive power. Data scientists determine the right set of features based on factors such as data type, size, and their goals, making feature engineering highly specific to each use case and dataset.
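
The aggregation described above might look roughly like this in pandas. The column names (`customer_id`, `purchase_time`, `amount`) and the data are assumptions made for the example, not part of any real schema.

```python
import pandas as pd

# Hypothetical "Interactions" table: one row per past purchase.
interactions = pd.DataFrame({
    "customer_id": [1, 1, 1, 2, 2],
    "purchase_time": pd.to_datetime([
        "2024-01-01", "2024-01-11", "2024-01-31",
        "2024-02-01", "2024-02-05",
    ]),
    "amount": [20.0, 35.0, 15.0, 100.0, 60.0],
})

interactions = interactions.sort_values(["customer_id", "purchase_time"])
# Days since the same customer's previous purchase (NaN for the first one).
interactions["days_since_prev"] = (
    interactions.groupby("customer_id")["purchase_time"].diff().dt.days
)

# One row per customer: this is the feature matrix for the prediction task.
customer_features = interactions.groupby("customer_id").agg(
    avg_days_between_purchases=("days_since_prev", "mean"),
    total_purchases=("amount", "size"),
    max_amount_spent=("amount", "max"),
)
```

The result has one row per customer, which is the shape the prediction task needs, even though the raw table has one row per interaction.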

Techniques for Feature Engineering

Feature engineering involves a wide range of techniques to process and transform features for machine learning models. Now, let’s delve into some frequently employed techniques:

1. Normalization and Standardization

Normalization and standardization are the two most common forms of feature scaling. Normalization (min-max scaling) confines feature values to the range 0 to 1, while standardization rescales a feature to have a mean of 0 and a standard deviation of 1. The choice between normalization and standardization depends on the nature of the data and the model being used.
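
Both forms of scaling reduce to a line of arithmetic. Here is a minimal NumPy sketch on a hypothetical feature; it is an illustration of the formulas rather than a recommended pipeline.

```python
import numpy as np

values = np.array([10.0, 20.0, 30.0, 40.0, 50.0])  # hypothetical feature

# Min-max normalization: rescale to the [0, 1] range.
normalized = (values - values.min()) / (values.max() - values.min())

# Standardization (z-score): mean 0, standard deviation 1.
standardized = (values - values.mean()) / values.std()
```

In practice the minimum, maximum, mean, and standard deviation should be computed on the training data only and then reused for validation and test data. scikit-learn's `MinMaxScaler` and `StandardScaler` follow this fit-then-transform pattern.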

2. Log Transformations

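Log transformations are applied to skewed data to reduce the skew. Taking the logarithm compresses large values far more than small ones, which pulls in a long right tail and makes the distribution more symmetric. Log transformations are particularly useful when dealing with data that spans several orders of magnitude. Because the logarithm is defined only for positive numbers, data that includes zeros is commonly transformed as log(1 + x) instead.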
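
A short sketch of a log transformation on a hypothetical feature with a wide range of magnitudes. It uses NumPy's `log1p`, which computes log(1 + x) and therefore accepts zeros.

```python
import numpy as np

# Hypothetical skewed feature spanning several orders of magnitude.
incomes = np.array([0.0, 9.0, 99.0, 999.0, 99999.0])

# log1p computes log(1 + x), so a value of 0 maps to 0 instead of -inf.
log_incomes = np.log1p(incomes)

# expm1 is the inverse, useful for mapping values back to the original scale.
recovered = np.expm1(log_incomes)
```

After the transformation the values span roughly 0 to 11.5 instead of 0 to 99,999, so the largest example no longer dominates the scale.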

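3. Feature Encoding

Feature encoding converts categorical values, such as text labels, into a numeric form that algorithms can consume. One-hot encoding, which creates one binary column per category, is a common example.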
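
As one possible illustration of feature encoding, the sketch below applies one-hot encoding with pandas. The `color` column and its values are hypothetical.

```python
import pandas as pd

# Hypothetical categorical feature.
df = pd.DataFrame({"color": ["red", "green", "blue", "green"]})

# One binary column per category; exactly one of them is 1 in each row.
encoded = pd.get_dummies(df, columns=["color"], dtype=int)
```

One-hot encoding suits categories with no natural order. For a column with many distinct values it produces many columns, so other encodings may be a better fit in that case.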

4. Principal Component Analysis (PCA)

Principal Component Analysis (PCA) is a technique used for dimensionality reduction. It takes a set of features, which are often correlated, and generates new features called principal components, each a linear combination of the originals. These components are orthogonal to each other and are ordered by how much of the data’s variance they capture, so keeping only the first few retains most of the information. PCA is particularly useful when dealing with datasets with a high number of features.
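
To make the idea concrete, here is a minimal PCA sketch that uses NumPy's singular value decomposition directly instead of a dedicated library class. The synthetic data, the random seed, and the choice of `k = 2` are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical data: 200 examples, 3 features. The second feature is
# almost a multiple of the first, so the two are strongly correlated.
x1 = rng.normal(size=200)
X = np.column_stack([
    x1,
    2 * x1 + rng.normal(scale=0.1, size=200),
    rng.normal(size=200),
])

# PCA operates on mean-centered data.
X_centered = X - X.mean(axis=0)

# Rows of Vt are the principal directions, ordered by captured variance.
U, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
explained_variance_ratio = S**2 / np.sum(S**2)

# Project onto the first k principal components.
k = 2
X_reduced = X_centered @ Vt[:k].T
```

Because two of the three features carry nearly the same information, two components retain almost all of the variance. On real data, `explained_variance_ratio` is a common guide for choosing `k`.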

5. Data Imputation

Data imputation is the process of filling in missing values within the dataset. Simple statistical techniques such as mean, median, or mode imputation can be used, as can zero fill or, for ordered data such as time series, forward filling and backward filling. The choice of imputation technique depends on the nature of the missing values and the dataset.
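
The imputation strategies named above can be compared side by side on a small pandas Series. The values are hypothetical.

```python
import numpy as np
import pandas as pd

# Hypothetical feature with two missing values.
s = pd.Series([1.0, np.nan, 3.0, np.nan, 8.0])

mean_filled = s.fillna(s.mean())       # mean of the observed values
median_filled = s.fillna(s.median())   # median of the observed values
zero_filled = s.fillna(0)              # constant fill
forward_filled = s.ffill()             # carry the last observed value forward
backward_filled = s.bfill()            # pull the next observed value backward
```

Forward and backward filling only make sense when row order is meaningful, for example in a time series.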

These examples provide just a glimpse into the world of feature engineering techniques. The choice of techniques depends on the specific dataset, industry, and the goals of the data scientist. It is important to experiment with different techniques to identify the most effective ones for a particular machine learning task.

The Significance of Feature Selection

Feature selection, as the name suggests, involves selecting a subset of features from the dataset that have the most significant impact on the dependent variable. The benefits of feature selection include optimized dataset size, reduced memory consumption, improved model performance, and interpretability. Commonly used approaches and checks include the following.

1. Domain Expertise

Domain expertise is a manual method of identifying important variables within a dataset. By consulting with a domain or business expert, data analysts can gain valuable insights and identify the relevant features needed for model building. The expertise of the domain expert can greatly enhance the feature selection process.

2. Missing Values

Dropping features with a high percentage of missing values (typically above 40% to 50%) is recommended, as imputing them using central tendency methods may not yield meaningful results. Removing these features helps in simplifying the dataset and focusing on the remaining relevant features.
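
A sketch of this filter in pandas follows. The 50% threshold is taken from the upper end of the range mentioned above, and the DataFrame is hypothetical.

```python
import numpy as np
import pandas as pd

# Hypothetical dataset with missing values.
df = pd.DataFrame({
    "age": [25, 31, np.nan, 40, 52],
    "income": [np.nan, np.nan, np.nan, 50_000, 62_000],
    "city": ["A", "B", "B", None, "A"],
})

threshold = 0.5  # assumed cutoff; tune per dataset

# Fraction of missing values in each column.
missing_fraction = df.isna().mean()

# Keep only the columns at or below the threshold.
kept = df.loc[:, missing_fraction <= threshold]
```

Here `income` is 60% missing and is dropped, while `age` and `city` (20% missing each) are kept and can be imputed.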

3. Correlation

Correlation refers to the relationship between independent features. Highly correlated features can lead to collinearity issues, impacting the model’s weights and overall performance. Correlation coefficients such as Pearson’s r measure the strength and direction of a linear relationship between two features. If features exhibit high correlation, eliminating or combining them can reduce redundancy.
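
One way to act on pairwise correlation is sketched below with pandas. The 0.9 threshold, the synthetic data, and the rule of dropping the later column of each correlated pair are all assumptions for illustration.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
height_cm = rng.normal(170, 10, size=100)
# Hypothetical features: height appears twice in different units.
df = pd.DataFrame({
    "height_cm": height_cm,
    "height_in": height_cm / 2.54,
    "weight_kg": rng.normal(70, 8, size=100),
})

# Absolute Pearson correlation between every pair of features.
corr = df.corr(method="pearson").abs()

# Keep only the upper triangle so each pair is considered once.
upper = corr.where(np.triu(np.ones(corr.shape, dtype=bool), k=1))

threshold = 0.9  # assumed cutoff
to_drop = [col for col in upper.columns if (upper[col] > threshold).any()]
reduced = df.drop(columns=to_drop)
```

Which member of a correlated pair to drop is a judgment call. This sketch simply drops the one that appears later in the column order.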

4. Multi-collinearity

Multi-collinearity occurs when a feature can be closely predicted from a linear combination of the other features, which pairwise correlation alone can miss. It can heavily distort the coefficient estimates of regression models. The Variance Inflation Factor (VIF) is a measure used to identify the presence of multi-collinearity; it starts at 1 (no collinearity) and has no upper bound. A common rule of thumb treats features with a VIF greater than 5 as highly collinear, and these may need to be addressed to improve model performance.
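
The VIF of a feature is 1 / (1 - R²), where R² comes from regressing that feature on all the others. Below is a minimal NumPy sketch of that definition. The helper name `variance_inflation_factors` and the synthetic data are hypothetical, written for this example.

```python
import numpy as np

def variance_inflation_factors(X):
    """Return the VIF of each column of X (examples x features)."""
    X = np.asarray(X, dtype=float)
    vifs = []
    for j in range(X.shape[1]):
        y = X[:, j]
        others = np.delete(X, j, axis=1)
        # Regress feature j on the remaining features plus an intercept.
        A = np.column_stack([np.ones(len(y)), others])
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)
        residuals = y - A @ coef
        r_squared = 1.0 - residuals.var() / y.var()
        vifs.append(1.0 / (1.0 - r_squared))
    return np.array(vifs)

rng = np.random.default_rng(0)
a = rng.normal(size=500)
b = rng.normal(size=500)
# c is nearly a linear combination of a and b.
c = a + b + rng.normal(scale=0.1, size=500)

vifs = variance_inflation_factors(np.column_stack([a, b, c]))
vifs_independent = variance_inflation_factors(np.column_stack([a, b]))
```

With `c` included, all three features have a VIF well above 5, even though `a` and `b` are uncorrelated with each other. Without it, both remaining VIFs are close to 1.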

5. Zero-Variance Check

Features with zero variance take the same value for every example, so they carry no information about the dependent variable. These features can be dropped from the dataset as they do not contribute to the model’s generalization.
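
A zero-variance check can be as simple as counting distinct values per column, which also works for non-numeric columns. The DataFrame below is hypothetical.

```python
import pandas as pd

# Hypothetical dataset: two columns never change.
df = pd.DataFrame({
    "country": ["US", "US", "US", "US"],
    "plan_version": [1, 1, 1, 1],
    "monthly_spend": [10.0, 25.5, 7.0, 12.0],
})

# A column with a single distinct value is constant.
constant_columns = [
    col for col in df.columns if df[col].nunique(dropna=False) <= 1
]
reduced = df.drop(columns=constant_columns)
```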

6. Feature Derivation

Feature derivation involves creating new features from existing ones. This can mean splitting a column, combining multiple features, or applying domain-specific transformations. For instance, one can split a column with date and time information into separate date and time features. Combining features like selling price and cost price can create a new feature, such as profit, directly applicable for modeling.
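
Both derivations mentioned above, splitting a timestamp and computing profit, might look like this in pandas. The column names and values are hypothetical.

```python
import pandas as pd

# Hypothetical orders table.
orders = pd.DataFrame({
    "ordered_at": pd.to_datetime(["2024-03-01 09:30:00", "2024-03-02 18:45:00"]),
    "selling_price": [120.0, 80.0],
    "cost_price": [90.0, 95.0],
})

# Split one datetime column into separate date and time features.
orders["order_date"] = orders["ordered_at"].dt.date
orders["order_time"] = orders["ordered_at"].dt.time

# Combine two existing features into a new one.
orders["profit"] = orders["selling_price"] - orders["cost_price"]
```

A model usually needs the derived date and time parts in numeric form, for example the hour of day or the day of week, both of which are available through the same `.dt` accessor.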

Frequently Asked Questions (FAQs)

The following questions recap the key points about feature engineering and feature selection covered in this guide.

Q1: What is feature engineering in machine learning?

A1: Feature engineering involves transforming raw data into a format that enhances the performance of machine learning models. It includes creating new features, modifying existing ones, and optimizing the dataset for improved model accuracy.

Q2: Why is feature engineering important in machine learning?

A2: Feature engineering is crucial as it directly impacts a model’s ability to learn patterns from data. Well-crafted features can enhance model performance, increase predictive accuracy, and contribute to more robust and interpretable machine learning models.

Q3: What techniques do practitioners commonly use in feature engineering?

A3: Common techniques include one-hot encoding, scaling, binning, creating interaction terms, and handling missing values. These methods aim to extract relevant information from raw data, making it suitable for machine learning algorithms.

Q4: How does feature selection differ from feature engineering?

A4: Feature selection involves choosing a subset of the most relevant features from the original dataset, discarding irrelevant or redundant ones. Feature engineering focuses on transforming and creating features, while feature selection streamlines the set of features used in a model.

Q5: What challenges do feature engineering and selection present?

A5: Challenges include overfitting, determining which features are truly informative, and finding the right balance between too many and too few features. Understanding the data and the problem domain is critical to overcoming these challenges.

Q6: How does feature engineering impact model interpretability?

A6: Well-engineered features contribute to better model interpretability by highlighting meaningful patterns and relationships in the data. Clear and relevant features make it easier to understand the model’s decision-making process.

Q7: Are there automated tools for feature engineering and selection?

A7: Yes, libraries such as scikit-learn and feature-engine offer functions for both feature engineering and selection. These tools can speed up the process and help identify feature sets that work well for a given model.

Q8: Can feature engineering and selection improve model efficiency?

A8: Yes, efficient feature engineering and selection contribute to improved model efficiency by reducing computational complexity, training time, and the risk of overfitting. This is particularly valuable in scenarios with large datasets or resource constraints.

Q9: How do feature engineering and selection impact different types of machine learning models?

A9: The impact varies across models, but in general, well-engineered and selected features enhance the performance of diverse machine learning models, including linear models, decision trees, and neural networks.

Q10: Should organizations regularly revisit feature engineering, or is it a one-time process?

A10: Feature engineering is an iterative process. While the initial phase is essential, it is advisable to revisit and refine features based on model performance and evolving data characteristics for sustained model improvement.

Conclusion

In conclusion, feature engineering and feature selection are critical steps in the machine learning workflow. Feature engineering involves extracting meaningful variables from raw data, while feature selection focuses on identifying the most important features for model building. By applying various techniques such as normalization, log transformations, feature encoding, PCA, and data imputation, data scientists can enhance model performance and interpretability.

It is important to understand the specific requirements of the dataset, industry, and machine learning algorithms when selecting and processing features. Additionally, consulting domain experts and leveraging their knowledge can provide valuable insights into feature selection. By combining the power of feature engineering and feature selection, data scientists can build accurate and robust machine learning models that effectively generalize and provide actionable insights.

Remember that feature engineering and feature selection are iterative processes, and experimentation with different techniques is crucial to find the optimal set of features for each machine learning task. By continuously refining and improving features, data scientists can unlock the full potential of their models and drive meaningful results in various domains.