Machine Learning Models

Introduction

Technology enthusiasts use the term “machine learning” all the time. Soon it will become common knowledge, part of the everyday lives of people who aren’t very tech-savvy.

We’ve already talked about machine learning in previous blogs. In today’s discussion, we’ll look at machine learning models and how they help computers recognize patterns and speed up processes.

What is a Machine Learning Model?

A machine learning model is a piece of software that has been trained to recognize certain patterns. You train a model on a set of data, giving it an algorithm it can use to reason about and learn from that data.

Once the model has been trained, you can use it to reason about data it hasn’t seen before and make predictions about that data.

Consider this: you want to build an app that can recognize a person’s emotions from their facial expressions. You can train a model by giving it images of faces, each labeled with the emotion it shows. Then you can use that model in an app to determine the mood of any user at any given time.

A model is a simplified representation of something real. A globe, for example, is a model of the Earth: the Earth is not a perfect sphere, but a spherical globe is close enough that we can use it to reason about the real planet.

Similarly, imagine there is a natural process that determines whether someone will buy something from a website. We could build a model that imitates that process: we give it some information about a person, and it tells us whether that person is likely to buy a certain product.

In other words, a “machine learning model” is the model that a machine learning system generates from data.
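To make the train-then-predict idea concrete, here is a minimal Python sketch of the website-purchase example. It is only an illustration under stated assumptions: the features (hours on site, pages viewed), the data, and the helper names `fit` and `predict` are all hypothetical, and the "model" is a simple nearest-centroid classifier that remembers one average point per label.

```python
from math import dist


def fit(samples, labels):
    """Training step: compute the mean feature vector (centroid) for each label."""
    sums, counts = {}, {}
    for x, y in zip(samples, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}


def predict(model, x):
    """Prediction step: return the label whose centroid is closest to x."""
    return min(model, key=lambda y: dist(model[y], x))


# Hypothetical training data: (hours on site, pages viewed) -> bought?
X = [(0.5, 2), (1.0, 3), (4.0, 12), (5.0, 15)]
y = ["no", "no", "yes", "yes"]

model = fit(X, y)
print(predict(model, (4.5, 10)))  # an unseen visitor; prints "yes"
```

Once `fit` has run, `predict` never looks at the raw training data again; everything it needs is stored in the learned centroids, which is the sense in which the model "generalizes" to visitors it has not seen.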

Types of Machine Learning Models

Machine learning models can be grouped by the learning style they follow: supervised learning, unsupervised learning, or reinforcement learning.

Supervised machine learning models

Classification

Classification is a supervised learning task in which a model predicts a class label for a given input. To learn this mapping, the model needs many examples of inputs paired with their correct outputs (labels).

The training dataset is used to find the best way to map input data to class labels. To be useful, the training data should contain many examples of each class label.

Classification is used, for example, to filter spam emails, identify languages, search documents, analyze sentiment, recognize handwritten characters, and detect fraud.

These are some popular classification algorithms:

Logistic regression: a linear model used for binary classification.

K-Nearest Neighbors (KNN): a simple but time-consuming way to classify a new data point. It finds the training points most similar to the new point and assigns the label that is most common among them.

Decision Tree: a classifier built from a series of “if/else” rules, which makes it relatively resistant to outliers.

Support vector machines (SVMs): models that can be used for both binary and multiclass classification.

Naive Bayes: a probabilistic classifier based on Bayes’ theorem, which assumes that the input features are independent of one another given the class.
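As one example from this list, a k-nearest-neighbors classifier fits in a few lines. This is a minimal sketch assuming Euclidean distance and a plain majority vote; the two-feature “spam”/“ham” points are made up, and `knn_predict` is a hypothetical helper rather than a library function.

```python
from collections import Counter
from math import dist


def knn_predict(train_X, train_y, x, k=3):
    """Classify x by majority vote among its k nearest training points."""
    # Rank every training point by distance to x. Scanning the whole
    # dataset at prediction time is what makes KNN slow on large data.
    ranked = sorted(zip(train_X, train_y), key=lambda pair: dist(pair[0], x))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Hypothetical 2-D feature vectors for emails, labeled "spam" or "ham".
train_X = [(1, 1), (1, 2), (2, 1), (6, 6), (6, 7), (7, 6)]
train_y = ["ham", "ham", "ham", "spam", "spam", "spam"]

print(knn_predict(train_X, train_y, (2, 2)))  # ham
print(knn_predict(train_X, train_y, (6, 5)))  # spam
```

Note that there is no training step at all: KNN simply stores the labeled data, which is why it is simple to implement but costly when classifying each new point.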

Regression

Regression analysis is a statistical method for working out how one or more independent variables relate to a dependent (target) variable.

In particular, regression analysis shows how the value of the dependent variable changes when one independent variable changes while the other independent variables are held fixed. It is used to predict continuous values such as temperature, age, salary, and price.

In simple terms, regression is a “best guess” method for making a forecast from a set of data: given points plotted on a graph, it finds the line or curve that fits them best.
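Fitting a straight line to points on a graph is the simplest case. The sketch below assumes a single input feature and uses ordinary least squares, where the slope is the covariance of x and y divided by the variance of x. The ticket-price numbers and the helper name `fit_line` are hypothetical, and the points were chosen to lie exactly on a line so the result is easy to check.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept (one feature)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x); the 1/n factors cancel.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    # The best-fit line always passes through the point of means.
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: days before departure vs. ticket price.
days = [1, 2, 3, 4]
price = [300, 280, 260, 240]

slope, intercept = fit_line(days, price)
print(slope, intercept)        # -20.0 320.0
print(slope * 5 + intercept)   # forecast for 5 days out: 220.0
```

With real, noisy data the points would scatter around the line, and the same formulas would give the line that minimizes the total squared vertical distance to them.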

Regression tasks, such as predicting the price of an aeroplane ticket, are very common. Here are some of the most widely used regression models:

Linear regression: a simple regression model that works best when the relationship between the variables is roughly linear and there is little or no multicollinearity among the independent variables.

Lasso regression: linear regression with L1 regularization.

Ridge regression: linear regression with L2 regularization.

Support vector regression (SVR): based on the same ideas as the Support Vector Machine (SVM) classifier, adapted to predict continuous values instead of class labels.

Ensemble regression: combines many models to improve prediction accuracy when the target variable is numerical.
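The difference between Lasso and Ridge is easiest to see with one feature and no intercept, where both have closed-form solutions. This is a simplified sketch with the objectives written in the docstrings: Ridge divides by a larger number and shrinks the weight smoothly, while Lasso soft-thresholds it and can set it exactly to zero. The data and the penalty strength `lam` are made up, and libraries scale these penalty terms differently, so `lam` does not map directly onto any particular library’s parameter.

```python
from math import copysign

# Hypothetical data lying exactly on y = 2x (single feature, no intercept).
xs = [1, 2, 3]
ys = [2, 4, 6]


def _sums(xs, ys):
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy, sxx


def ols(xs, ys):
    """Plain least squares: minimize sum((y - w*x)**2)."""
    sxy, sxx = _sums(xs, ys)
    return sxy / sxx


def ridge(xs, ys, lam):
    """L2 penalty: minimize sum((y - w*x)**2) + lam * w**2."""
    sxy, sxx = _sums(xs, ys)
    return sxy / (sxx + lam)


def lasso(xs, ys, lam):
    """L1 penalty: minimize sum((y - w*x)**2) + lam * abs(w).

    The solution soft-thresholds sxy by lam / 2, so a large enough
    penalty drives the weight exactly to zero.
    """
    sxy, sxx = _sums(xs, ys)
    return copysign(max(abs(sxy) - lam / 2, 0.0), sxy) / sxx


print(ols(xs, ys))          # 2.0
print(ridge(xs, ys, 14))    # 1.0 (shrunk toward zero)
print(lasso(xs, ys, 28))    # 1.0 (shrunk toward zero)
print(lasso(xs, ys, 100))   # 0.0 (feature dropped entirely)
```

That last line is why Lasso is often used for feature selection: with many features, a strong L1 penalty zeroes out the weights of the least useful ones, whereas Ridge only makes them small.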

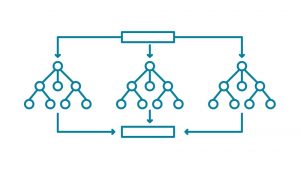

Unsupervised machine learning models

Deep Neural Networks

Neural networks are a family of algorithms, loosely modeled on the human brain, that are designed to recognize patterns. They interpret sensory data through a kind of machine perception, labeling or clustering raw input.

All real-world data, whether images, sound, text, or time series, must be translated into the numerical patterns that computers can recognize. These patterns are numbers encoded in vectors.
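As a small illustration of turning text into numbers, the sketch below builds a bag-of-words vector: one slot per vocabulary word, holding how many times that word appears. This is only one of many possible encodings (it ignores word order entirely), and the helper names `build_vocabulary` and `to_vector` are hypothetical.

```python
def build_vocabulary(docs):
    """Assign each distinct lowercase word an index, in first-seen order."""
    vocab = {}
    for doc in docs:
        for word in doc.lower().split():
            vocab.setdefault(word, len(vocab))
    return vocab


def to_vector(doc, vocab):
    """Count how often each vocabulary word occurs in doc (unknown words are ignored)."""
    vec = [0] * len(vocab)
    for word in doc.lower().split():
        if word in vocab:
            vec[vocab[word]] += 1
    return vec


docs = ["the cat sat", "the dog sat on the mat"]
vocab = build_vocabulary(docs)
print(vocab)  # {'the': 0, 'cat': 1, 'sat': 2, 'dog': 3, 'on': 4, 'mat': 5}
print(to_vector("the cat sat on the cat", vocab))  # [2, 2, 1, 0, 1, 0]
```

Images and audio go through the same kind of step, for example as arrays of pixel intensities or sampled amplitudes, before a network can process them.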

A deep neural network analyzes data using learned representations, somewhat like the way people approach problems. In traditional machine learning, the algorithm is given a list of features to analyze. In deep learning, the algorithm is given raw data and learns the features itself.

Deep learning relies on multilayer neural networks. It has advanced alongside the digital age, which has produced an avalanche of data from all over the world.

This data is so vast that it could take humans years to analyze it and extract useful information. Seeing the huge benefits of unlocking it, companies are turning to AI systems for help.

Here are some of the most important deep learning models that use neural network architecture:

- Multilayer Perceptrons

- Convolutional Neural Networks

- Recurrent Neural Networks

- Boltzmann Machines
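To show what “multilayer” means, here is a forward pass through a tiny multilayer perceptron: two inputs, one hidden layer of two ReLU units, and one output. The weights are hand-picked for illustration, not learned, so that the network computes XOR, a function no single linear layer can represent. In practice the weights would be learned from data, typically with backpropagation; the helper names are hypothetical.

```python
def relu(values):
    """Rectified linear unit: negative values become 0.0."""
    return [max(0.0, float(v)) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def mlp(x):
    # Hidden layer: 2 inputs -> 2 ReLU units (hand-picked weights).
    hidden = relu(dense(x, [[1, 1], [1, 1]], [0, -1]))
    # Output layer: 2 hidden units -> 1 linear output.
    return dense(hidden, [[1, -2]], [0])[0]


for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, mlp(x))  # outputs 0.0, 1.0, 1.0, 0.0 (XOR)
```

The hidden layer is what makes this possible: it re-represents the inputs so that the final, purely linear layer can separate them. Deep networks stack many such layers, each building features on top of the previous one.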

Clustering

Conceptually, clustering means grouping elements that are similar in some way. It helps identify related items automatically, without the need for human intervention. Supervised machine learning models must be trained on labeled or curated data, which needs to be homogeneous, and clustering is often a more efficient way to obtain such data than other methods.
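A common clustering algorithm is k-means. The sketch below is a bare-bones version of Lloyd’s algorithm, assuming 2-D points, Euclidean distance, a fixed number of iterations, and initial centroids picked by hand; practical implementations usually choose starting centroids more carefully and stop once the assignments no longer change. The data is hypothetical.

```python
from math import dist


def kmeans(points, centroids, iterations=10):
    """Lloyd's algorithm: alternate between assigning points and moving centroids."""
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        centroids = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters


# Hypothetical 2-D data with two obvious groups; initial centroids picked by hand.
points = [(1, 1), (1.5, 2), (1, 1.5), (8, 8), (8.5, 9), (9, 8)]
centroids, clusters = kmeans(points, centroids=[points[0], points[3]])
print(clusters)   # first three points in one cluster, last three in the other
print(centroids)
```

No labels are involved at any point: the groups emerge purely from the distances between points, which is what makes clustering an unsupervised technique.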

Association rule

Association rule learning is an unsupervised learning method. It examines how one data item depends on another and maps those dependencies so they can be put to profitable use.

It searches the dataset for interesting connections or relationships between variables, expressing what it finds as a set of rules.

Association rules are used in market basket analysis, web usage mining, continuous manufacturing, and many other areas.

Large retailers use a technique called market basket analysis to discover which products customers tend to buy together. To see how this works, think of a supermarket, where all the items in a shopping cart are purchased together.
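The strength of a rule such as {bread} -> {milk} is usually measured with support (how often the items appear together) and confidence (how often the rule holds when its left-hand side appears). This brute-force sketch checks every one-item-to-one-item rule in a made-up set of baskets; real systems use algorithms such as Apriori or FP-Growth to avoid enumerating everything, and the thresholds and helper names here are arbitrary.

```python
from itertools import combinations

# Hypothetical shopping baskets, one set of items per transaction.
BASKETS = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk", "eggs"},
]


def support(itemset):
    """Fraction of baskets containing every item in itemset."""
    return sum(1 for basket in BASKETS if itemset <= basket) / len(BASKETS)


def confidence(lhs, rhs):
    """Of the baskets containing lhs, the fraction that also contain rhs."""
    return support(lhs | rhs) / support(lhs)


def pair_rules(min_support, min_confidence):
    """Brute force: every {x} -> {y} rule that passes both thresholds."""
    items = sorted(set().union(*BASKETS))
    rules = []
    for a, b in combinations(items, 2):
        if support({a, b}) < min_support:
            continue
        for lhs, rhs in ((a, b), (b, a)):
            conf = confidence({lhs}, {rhs})
            if conf >= min_confidence:
                rules.append((lhs, rhs, conf))
    return rules


for lhs, rhs, conf in pair_rules(min_support=0.5, min_confidence=0.6):
    print(f"{{{lhs}}} -> {{{rhs}}}: confidence {conf:.2f}")
```

On this data every basket with butter also has bread, so {butter} -> {bread} has confidence 1.00, while the reverse rule only reaches 0.67: association rules are directional.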

Dimensionality reduction

A dataset can have many variables, or features, that add to its size. Techniques that reduce the number of input variables in a dataset are called “dimensionality reduction.”

In predictive modeling, the “curse of dimensionality” refers to the fact that adding more input features often makes a problem harder to model.

Dimensionality reduction methods are often used to visualize high-dimensional data. The same techniques can also be applied in real-world machine learning to simplify a classification or regression dataset so that a predictive model can be trained more effectively.
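Principal component analysis (PCA) is one of the most widely used dimensionality reduction techniques. For two features, the direction of greatest variance can be computed in closed form from the 2x2 covariance matrix, so the sketch below reduces 2-D points to a single coordinate without any linear-algebra library. It assumes exactly two features, the helper name is hypothetical, and the points are invented to lie on a line, so the 1-D version loses no information.

```python
from math import atan2, cos, sin


def pca_2d_to_1d(points):
    """Reduce 2-D points to 1-D by projecting onto the top principal component."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    centered = [(x - mean_x, y - mean_y) for x, y in points]
    # Entries of the 2x2 covariance matrix [[a, b], [b, c]].
    a = sum(x * x for x, _ in centered) / n
    c = sum(y * y for _, y in centered) / n
    b = sum(x * y for x, y in centered) / n
    # Angle of the eigenvector with the largest eigenvalue, i.e. the
    # direction along which the data varies the most.
    theta = 0.5 * atan2(2 * b, a - c)
    direction = (cos(theta), sin(theta))
    # Each point's single new coordinate: its position along that direction.
    coords = [x * direction[0] + y * direction[1] for x, y in centered]
    return (mean_x, mean_y), direction, coords


points = [(1, 2), (2, 4), (3, 6), (4, 8)]
mean, direction, coords = pca_2d_to_1d(points)
print(direction)  # roughly (0.447, 0.894), the direction of the line y = 2x
print(coords)

# Because these points lie on a line, mapping back recovers them exactly.
restored = [(mean[0] + t * direction[0], mean[1] + t * direction[1]) for t in coords]
```

With real data the points would not lie exactly on a line, so the reconstruction would be approximate; PCA keeps the direction that preserves as much of the variance as possible.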

Reinforcement machine learning problems

Markov Decision Process

A Markov Decision Process (MDP) is a problem that many different algorithms can solve. In fact, it defines the Reinforcement Learning problem: the algorithms that solve it are called Reinforcement Learning algorithms.

In an MDP, an agent must decide what to do based on its current state. When this decision step repeats again and again, the Markov Decision Process provides a framework for describing decision-making that unfolds over time.

Many real-world problems modeled as MDPs have very large state and/or action spaces, which makes it hard to find practical solutions to the resulting models.
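For a small MDP, the Bellman optimality equation, V(s) = max over actions a of the sum over next states s' of P(s' | s, a) [R + γ V(s')], can be solved by value iteration: apply the equation to every state repeatedly until the values settle. The three-state MDP below, its transition probabilities, its rewards, and the discount factor γ = 0.9 are all hypothetical.

```python
# Hypothetical MDP: states 0, 1, 2 on a line; 2 is a terminal goal.
# TRANSITIONS[state][action] = list of (probability, next_state, reward).
TRANSITIONS = {
    0: {"left": [(1.0, 0, 0.0)], "right": [(1.0, 1, 0.0)]},
    1: {"left": [(1.0, 0, 0.0)], "right": [(0.8, 2, 1.0), (0.2, 1, 0.0)]},
    2: {},  # terminal state: no actions, value stays 0
}


def action_value(values, outcomes, gamma):
    """Expected reward plus discounted value of the next state."""
    return sum(p * (r + gamma * values[s2]) for p, s2, r in outcomes)


def value_iteration(transitions, gamma=0.9, sweeps=200):
    values = {s: 0.0 for s in transitions}
    for _ in range(sweeps):
        for s, actions in transitions.items():
            if actions:  # Bellman optimality update: value of the best action
                values[s] = max(action_value(values, o, gamma) for o in actions.values())
    # Greedy policy with respect to the converged values.
    policy = {
        s: max(actions, key=lambda a: action_value(values, actions[a], gamma))
        for s, actions in transitions.items()
        if actions
    }
    return values, policy


values, policy = value_iteration(TRANSITIONS)
print(policy)  # {0: 'right', 1: 'right'}
print(values)
```

This works because the whole table of states fits in memory; with the very large state spaces mentioned above, sweeping over every state becomes impractical, which motivates sampling-based methods such as Q-learning.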

Q-learning

Q-learning is an important reinforcement learning algorithm. Its goal is to learn the best action-value function, called the Q function, for a given problem. The “Q” stands for quality: Q(s, a) estimates how good it is to take action a in state s, which helps the agent decide what to do next to get the best results. It is a good algorithm to start with. The values are stored in a table called the Q-table, where each (state, action) entry holds the expected future reward of taking that action in that state.

Q-learning approximates this function by repeatedly updating Q(s, a) using the Bellman equation. As the Q-table’s values improve, the agent can increasingly exploit what it has learned about the environment and act better.
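A minimal tabular Q-learning sketch on a hypothetical four-cell corridor shows the update rule Q(s, a) <- Q(s, a) + α [r + γ max Q(s', ·) - Q(s, a)] in action. The environment, the learning rate α, the discount γ, and the exploration rate ε are arbitrary choices for illustration. The agent explores with an epsilon-greedy policy and, after training, prefers moving right toward the goal.

```python
import random

N_STATES = 4            # hypothetical corridor: cells 0..3, cell 3 is the goal
ACTIONS = (0, 1)        # 0 = move left, 1 = move right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2   # arbitrary illustrative settings


def step(state, action):
    """Move one cell (walls at both ends); reward 1.0 only on reaching the goal."""
    if action == 0:
        nxt = max(0, state - 1)
    else:
        nxt = min(N_STATES - 1, state + 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def q_learning(episodes=200, seed=0):
    rng = random.Random(seed)
    q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]  # the Q-table
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < EPSILON:
                action = rng.choice(ACTIONS)          # explore
            else:
                best = max(q[state])                  # exploit, breaking ties randomly
                action = rng.choice([a for a in ACTIONS if q[state][a] == best])
            nxt, reward, done = step(state, action)
            # Bellman target: a terminal state has no future value.
            target = reward if done else reward + GAMMA * max(q[nxt])
            q[state][action] += ALPHA * (target - q[state][action])
            state = nxt
    return q


q = q_learning()
# Greedy action (1 = right) for each non-goal cell after training.
policy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(policy)
```

Unlike value iteration, the agent never sees the transition rules directly; it only learns from the (state, action, reward, next state) samples it collects while acting, which is what lets Q-learning work without a model of the environment.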

Conclusion

These machine learning models play a big role in many important parts of our lives. Together, they form an ecosystem that aims to make our lives easier.

Machine learning models let us accomplish huge tasks in a matter of seconds and make everyday life less stressful.
