Linear Algebra for Machine Learning and Data Science

Price: $24.99

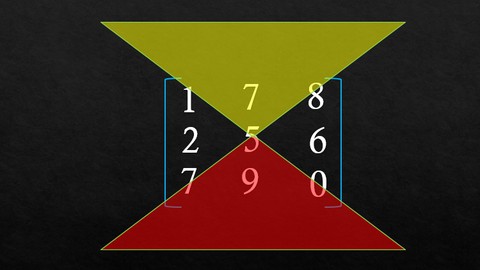

Why study linear algebra?

Linear algebra is vital across science in general. Because linear equations are so easy to solve, practically every area of modern science contains models in which nonlinear equations are approximated by linear ones (using Taylor expansion arguments), and solving the resulting linear system helps the theory develop. Even starting a list would be pointless; you and I have no idea how widely people exploit the power of linear algebra to approximate solutions to equations. Since in most cases solving equations is synonymous with solving a practical problem, this can be VERY useful. This reason alone justifies the existence of linear algebra, and it is reason enough for any scientist to know it.

More specifically, within mathematics, linear algebra is of course used in abstract algebra: vector spaces arise in many different areas of algebra, such as group theory, ring theory, module theory, representation theory, Galois theory, and more. Understanding the tools of linear algebra helps one understand those theories better, and some theorems of linear algebra in turn require an understanding of those theories; the subjects are linked in many intrinsic ways.

Outside of algebra, a large part of analysis, called functional analysis, is essentially the infinite-dimensional version of linear algebra. In infinite dimensions, many finite-dimensional theorems break down in very interesting ways: some of our intuition survives, but much of it does not. None of the algebraic intuition goes away, but much of the analytic intuition does: closed balls of positive radius are never compact, norms are not always equivalent, and the structure of the space can change a lot depending on the norm you use. Hence even for someone studying analysis, understanding linear algebra is vital.
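To make the Taylor-expansion idea concrete, here is one classic instance we chose for illustration: Newton's method for a system of two equations. At each step the nonlinear equations are replaced by their first-order Taylor (linear) approximation, and the resulting 2x2 linear system is solved exactly. This is a minimal pure-Python sketch; the example system and the helper names `solve_2x2` and `newton_2d` are our own hypothetical choices, and a production solver would also need to handle poor starting points.

```python
import math


def solve_2x2(a, b):
    """Solve the 2x2 linear system a·d = b with Cramer's rule."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    if det == 0:
        raise ValueError("Jacobian is singular; linearization gives no unique step")
    d0 = (b[0] * a[1][1] - a[0][1] * b[1]) / det
    d1 = (a[0][0] * b[1] - b[0] * a[1][0]) / det
    return d0, d1


def newton_2d(f, jacobian, x, y, max_steps=20, tol=1e-12):
    """Newton's method: repeatedly replace f by its first-order Taylor expansion."""
    for _ in range(max_steps):
        fx, fy = f(x, y)
        # f(p + d) ≈ f(p) + J(p) d; setting this to zero gives the linear system J(p) d = -f(p).
        dx, dy = solve_2x2(jacobian(x, y), (-fx, -fy))
        x, y = x + dx, y + dy
        if abs(dx) + abs(dy) < tol:
            break
    return x, y


# Hypothetical example system: x^2 + y^2 = 4 and x = y, with positive solution x = y = sqrt(2).
def circle_and_line(x, y):
    return x**2 + y**2 - 4, x - y


def jacobian(x, y):
    # Matrix of partial derivatives of circle_and_line.
    return [[2 * x, 2 * y], [1, -1]]


x, y = newton_2d(circle_and_line, jacobian, 1.0, 0.5)
print(math.isclose(x, math.sqrt(2)), math.isclose(y, math.sqrt(2)))  # True True
```

Every iteration solves only a linear system. The nonlinearity is handled entirely by re-linearizing around the newest point.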
In this linear algebra course, we will learn:

- Classification of matrices
- Matrix algebra: addition, subtraction, and multiplication
- Transpose of a matrix; symmetric and skew-symmetric matrices
- Real and complex matrices
- Determinant of a matrix: minors and cofactors
- Inverse of a matrix: adjoint of a matrix
- Finding the rank of a matrix; row echelon form
- Relation between the rank and the vectors of a matrix
- Linearly independent and dependent vectors
- Vector spaces: dimension, basis, span, and nullity
- Solving systems of linear equations
- Homogeneous and non-homogeneous systems of equations
- Eigenvalues and their corresponding eigenvectors
- Properties of eigenvalues and eigenvectors
- Cayley-Hamilton theorem
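As a small taste of several of these topics (row echelon form and rank, determinants by cofactor expansion, and the Cayley-Hamilton theorem), here is a minimal pure-Python sketch. The function names `row_echelon`, `determinant`, and `cayley_hamilton_2x2` are our own hypothetical helpers, not part of any library or of the course material. Exact `Fraction` arithmetic is used so that zero pivots are detected reliably, and the cofactor expansion is only practical for small matrices.

```python
from fractions import Fraction


def row_echelon(matrix):
    """Return (row echelon form, rank) using exact Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in matrix]
    rows, cols = len(m), len(m[0])
    pivot_row = 0
    for col in range(cols):
        # Find a row at or below pivot_row with a nonzero entry in this column.
        pivot = next((r for r in range(pivot_row, rows) if m[r][col] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        m[pivot_row], m[pivot] = m[pivot], m[pivot_row]
        # Eliminate every entry below the pivot.
        for r in range(pivot_row + 1, rows):
            factor = m[r][col] / m[pivot_row][col]
            m[r] = [a - factor * b for a, b in zip(m[r], m[pivot_row])]
        pivot_row += 1
        if pivot_row == rows:
            break
    # The rank is the number of pivots, i.e. the number of nonzero rows.
    return m, pivot_row


def determinant(m):
    """Determinant by cofactor expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    total = 0
    for j in range(len(m)):
        # Minor: delete row 0 and column j; the cofactor sign is (-1)^j.
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * m[0][j] * determinant(minor)
    return total


def cayley_hamilton_2x2(a):
    """Evaluate p(A) = A^2 - tr(A) A + det(A) I, where p is A's characteristic polynomial."""
    (p, q), (r, s) = a
    trace, det = p + s, p * s - q * r
    a2 = [[p * p + q * r, p * q + q * s], [r * p + s * r, r * q + s * s]]
    return [[a2[i][j] - trace * a[i][j] + (det if i == j else 0) for j in range(2)]
            for i in range(2)]


A = [[1, 2, 3], [2, 4, 6], [1, 0, 1]]  # second row is twice the first
_, rank = row_echelon(A)
print(rank)                                    # 2
print(determinant(A))                          # 0
print(cayley_hamilton_2x2([[1, 2], [3, 4]]))   # [[0, 0], [0, 0]]
```

The matrix `A` has rank 2 < 3 and determinant 0, illustrating that a square matrix is singular exactly when its rows are linearly dependent. The last line checks the Cayley-Hamilton theorem on one 2x2 example: the matrix satisfies its own characteristic polynomial.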